Sagiri: Exposure-Limited Image Enhancement with Generative Diffusion Prior

Baiang Li1,5

Sizhuo Ma3

Yanhong Zeng1

Xiaogang Xu2,4

Zhao Zhang5

Youqing Fang1

Jian Wang3✝

Kai Chen1✝

✝Corresponding authors.

1Shanghai AI Laboratory

2The Chinese University of Hong Kong

3Snap Research

4Zhejiang University

5Hefei University of Technology

[Paper]

[GitHub]

[BibTeX]

What can Sagiri do?

Input

After restoration model

Restoration model + Sagiri (on entire image)

Input

After restoration model

Restoration model + Sagiri (with region selection)

Input | After restoration model

Restoration model + Sagiri (with prompt a)

Restoration model + Sagiri (with prompt b)

Prompt a: "A building with a red brick exterior, white columns, and a black door..."; Prompt b: "A building with a black brick exterior, white columns, and a red door...". Please zoom in to see more details.

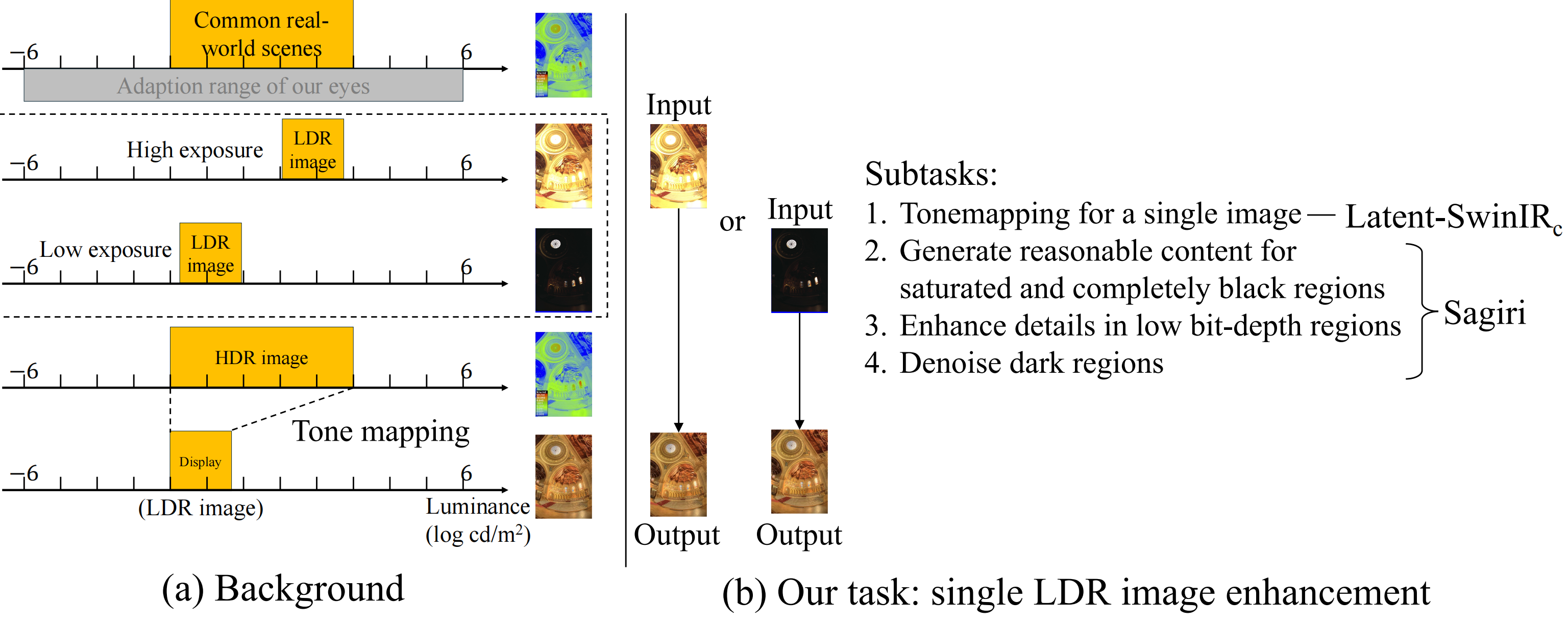

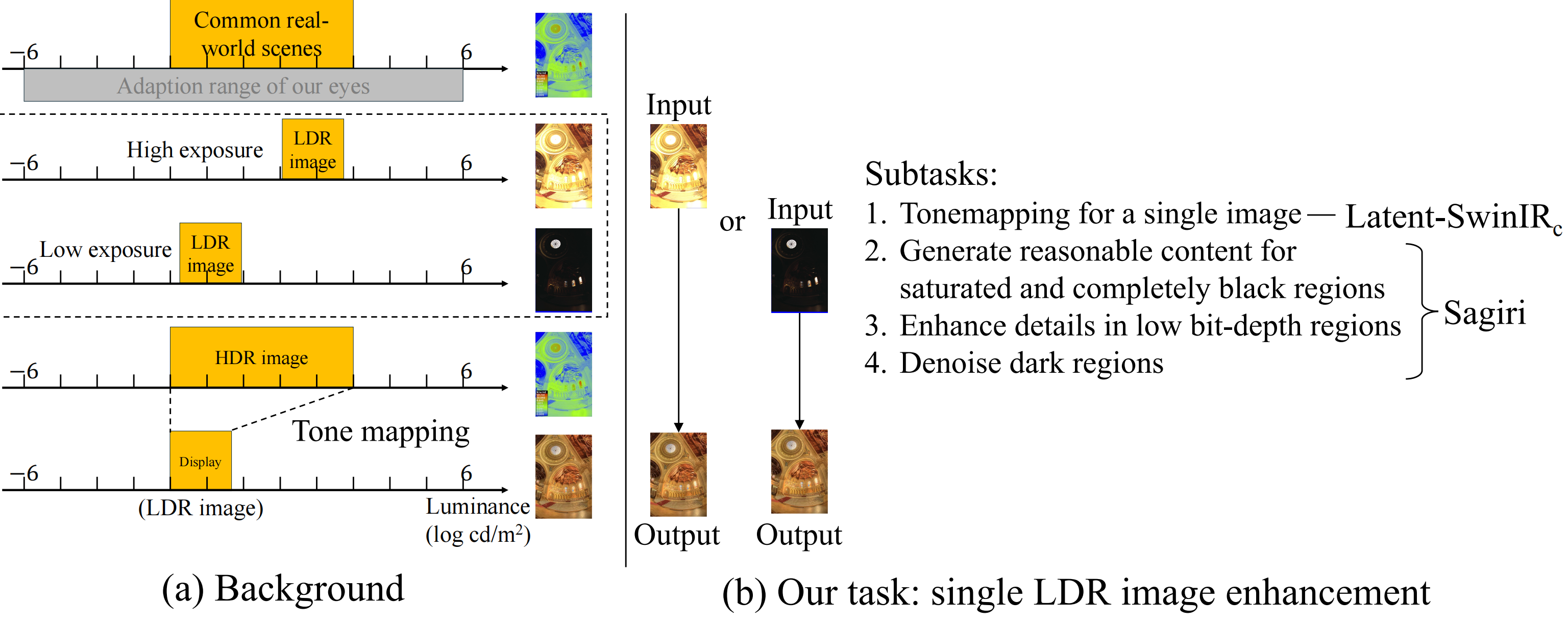

What's our task?

(a) Real-world scenes have broad dynamic ranges. However, when they are captured by an ordinary low-bit-depth mobile camera, the chosen exposure often leaves over-saturated bright regions or heavily quantized dark areas with strong noise. (b) Existing work on mis-exposed/low-light image enhancement, multi-exposure HDR (high dynamic range) reconstruction, and single-exposure HDR reconstruction can enhance SDR (standard dynamic range) images with no or only small areas of missing content, but it is not designed to handle large regions at the extremes of the dynamic range. (c) As a result, such methods often produce blurry and unnatural imagery at dynamic range extremes. In this work, we aim to enhance these exposure-limited images by decomposing the complex task into the following challenges:

(a) color and brightness mapping, (b) denoising of dark areas, (c) detail enhancement in low bit-depth regions, and (d) content generation for saturated or near-black regions.
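To make challenge (d) concrete, the sketch below flags the pixels of an SDR image that are saturated or near-black, which is one simple way to estimate where content is missing. It is only an illustration and not part of Sagiri: the function name `exposure_limited_masks` and the thresholds `low` and `high` are assumptions chosen for the example.

```python
import numpy as np

def exposure_limited_masks(image, low=0.02, high=0.98):
    """Return boolean masks of near-black and saturated pixels.

    image: float array with values in [0, 1] and shape (H, W, 3).
    low, high: illustrative thresholds (assumptions, not from the paper).
    """
    image = np.asarray(image, dtype=np.float64)
    # A pixel counts as saturated only if every channel is clipped,
    # and as near-black only if every channel is close to zero.
    saturated = image.min(axis=-1) >= high
    near_black = image.max(axis=-1) <= low
    return near_black, saturated

# Toy 4x4 mid-gray image with one clipped pixel, one black pixel,
# and one pixel that is clipped in only two channels.
img = np.full((4, 4, 3), 0.5)
img[0, 0] = 1.0
img[1, 1] = 0.0
img[2, 2] = [1.0, 0.5, 1.0]

near_black, saturated = exposure_limited_masks(img)
missing_fraction = (near_black | saturated).mean()
```

Requiring all channels to be clipped is a conservative choice: a pixel with one unclipped channel still carries some recoverable signal, so it is left out of the mask here.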

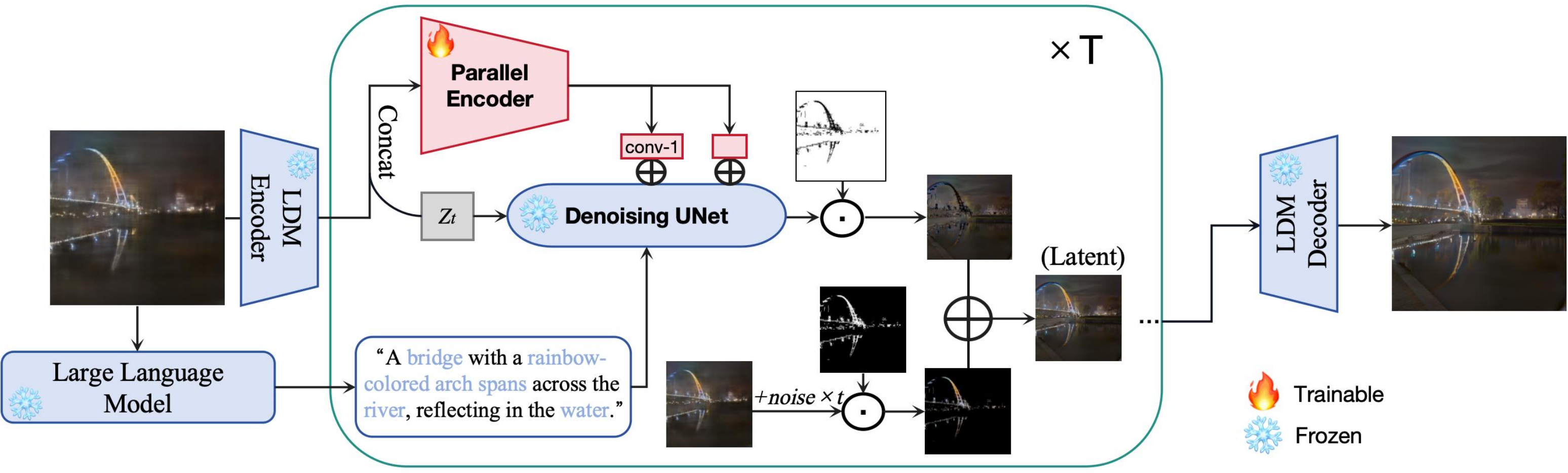

Methodology of Sagiri

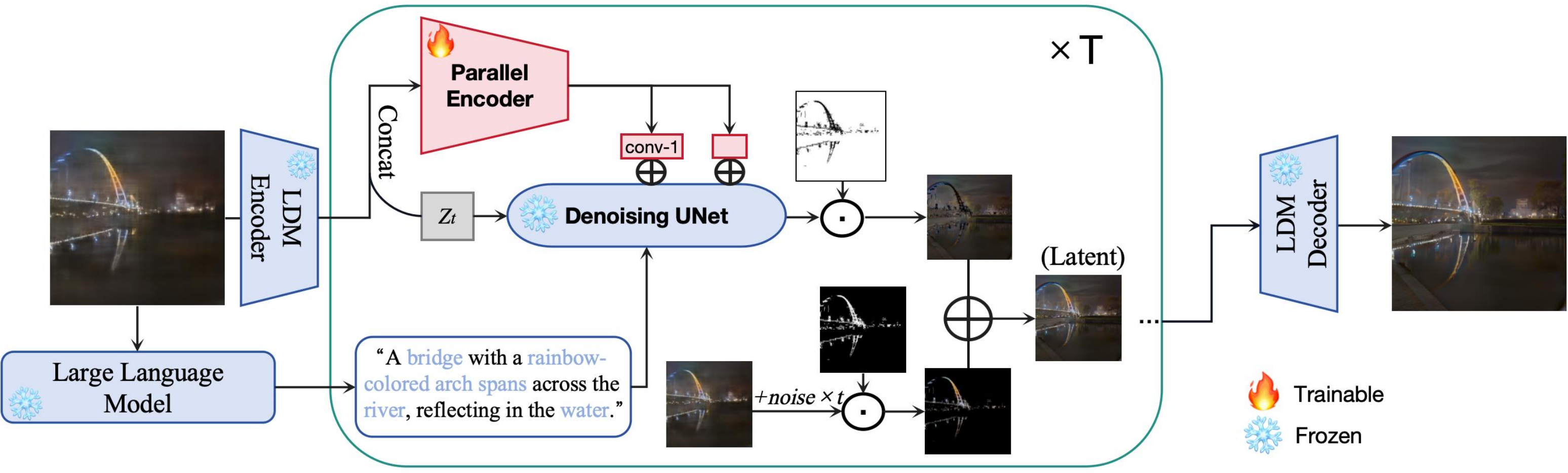

Capturing the full dynamic range of natural scenes is a significant challenge in photography and often results in SDR images with over- and under-exposed areas, where content details are significantly diminished.

In this project, we propose Sagiri, a versatile model for refining restored results: it generates more accurate details in known regions and produces high-quality content in unknown regions. It also lets users specify where and what to synthesize in areas lacking content, giving them more engagement and control.

The project page template is borrowed from AnimateDiff.